With the IT industry in full swing, Machine Learning (ML) is a field we encounter every day, often without knowing it.

The availability of fast computers with huge computational power allows this segment of Artificial Intelligence (AI) to grow even faster. I will give a brief overview of Machine Learning, starting with a definition and a short history, and then look at some good frameworks that support machine learning.

Definition

There are many descriptions of Machine Learning on the internet, but I found this one to be among the best (source: SAS):

“Machine learning is a method of data analysis that automates analytical model building. It is a branch of artificial intelligence based on the idea that systems can learn from data, identify patterns and make decisions with minimal human intervention.”

We must give the computer enough data if we expect it to make good decisions.

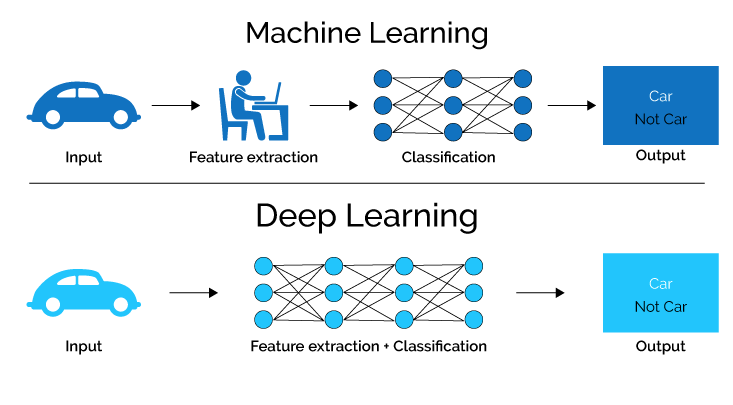

Machine learning uses many techniques to build algorithms that learn from data sets and make predictions. It is also used in data mining, a technique for discovering patterns and models in data sets where the relationships are previously unknown.
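
As a minimal sketch of what "learning from data and making predictions" can look like, the example below fits a straight line to a handful of points with ordinary least squares and then predicts a value it has not seen. The data set (hours studied versus test score) and the names `fit_line` and `predict` are made up for illustration; real ML systems use far richer models and far more data.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept (hypothetical helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalised,
    # because the 1/n factors cancel in the ratio).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: hours studied and the resulting test score.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

# "Learning": estimate the model parameters from the data.
slope, intercept = fit_line(hours, scores)


def predict(x):
    """Use the learned parameters to predict a score for unseen input."""
    return slope * x + intercept


print(predict(5.0))
```

The parameters are not written by hand; they are estimated from the examples, which is the core idea behind the definition above.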

History

Because of the power of new computing systems, machine learning today is different from the machine learning of the past. Machine learning was born from pattern recognition and the theory that computers can learn without being explicitly programmed to perform specific tasks. Researchers interested in artificial intelligence wanted to see whether computers could learn from data.

One of the most important aspects of ML is that it is iterative. As models created with ML tools are exposed to new data, they adapt to the changes. ML systems learn from previous computations to produce reliable, repeatable decisions and results. This science is not new, but it has recently gained fresh momentum.

Arthur Samuel coined the term Machine Learning in 1959 while working at IBM. As a scientific endeavour, machine learning grew out of the quest for artificial intelligence. Early researchers were trying to answer the question “can machines somehow be programmed to learn from data?”. They used various symbolic methods. The term “neural networks” is also closely associated with machine learning. Inspired by the biological neural connections that make up the human brain, scientists constructed neural network frameworks that connect many algorithms in order to process huge data inputs.

Here is an overview of the most significant developments of the past 70 years:

- 1950s – The first machine learning research is conducted using simple algorithms.

- 1960s – Bayesian methods are introduced for probabilistic inference in machine learning.

- 1970s – The ‘AI Winter’, a period of stagnation for ML.

- 1980s – Rediscovery of back-propagation causes a resurgence in machine learning research.

- 1990s – Before the 1990s, machine learning used a knowledge-driven approach. In the 1990s this shifts to a data-driven approach. Scientists begin creating programs for computers to analyze large amounts of data and draw conclusions, or “learn”, from the results. Support vector machines (SVMs) and recurrent neural networks (RNNs) become popular. The fields of computational complexity via neural networks and super-Turing computation emerge.

- 2000s – Support vector clustering, other kernel methods, and unsupervised machine learning methods become widespread.

- 2010s – The growth of deep learning makes machine learning an integral part of many software services and applications.

Popular Frameworks

There are many tools for Machine Learning. I will give a short introduction to a few of them:

TensorFlow

On its official site, TensorFlow is defined as an open source software library for high-performance numerical computation. Its flexible architecture allows easy deployment of computation across a variety of platforms, from desktops to clusters of servers to mobile and edge devices. TensorFlow was originally developed by researchers and engineers from the Google Brain team within Google’s AI organization.

Many large companies use TensorFlow. Well-known users include Uber, Airbnb, Google, SAP, Snapchat, Nvidia, Coca-Cola, and Twitter.

Keras

Keras is a Python deep learning library capable of running on top of Theano, TensorFlow, or CNTK. It was developed by François Chollet. The library lets data scientists run machine learning experiments quickly.

The official Keras site recommends using Keras if you need a deep learning library that:

- Allows easy and fast prototyping

- Supports both convolutional networks and recurrent networks, as well as combinations of the two

- Runs seamlessly on CPU and GPU
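
To give a feel for the "easy and fast prototyping" point, here is a minimal sketch that defines, trains, and queries a tiny network in a few lines. It assumes the Keras API that ships with TensorFlow (`tensorflow.keras`) and uses random made-up data; the layer sizes, epoch count, and variable names are arbitrary choices for illustration, not recommendations.

```python
import numpy as np
from tensorflow import keras

# Made-up data: 64 samples with 4 features each; the target is their sum.
rng = np.random.default_rng(0)
features = rng.normal(size=(64, 4)).astype("float32")
targets = features.sum(axis=1, keepdims=True)

# A small fully connected network: 4 inputs -> 8 hidden units -> 1 output.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1),
])

# Choose an optimizer and a loss function, then train for a few epochs.
model.compile(optimizer="adam", loss="mse")
history = model.fit(features, targets, epochs=5, batch_size=16, verbose=0)

# Ask the trained model for predictions (one value per input row).
predictions = model.predict(features, verbose=0)
print(predictions.shape)
```

The same code runs on a CPU or a GPU without changes, since device placement is handled by the backend.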

Pandas

Pandas is a popular Python library for data analysis that is widely used in ML work. Its goal is to fetch and prepare the data that will later be used by other ML libraries such as TensorFlow.

It supports many complex operations over datasets. Pandas can read data from many kinds of sources, such as SQL databases, text, CSV, Excel, and JSON files, and many other formats.

The data is first loaded into memory, after which many operations can be performed, such as analysing, transforming, and backfilling missing values.

Many SQL-like operations over datasets can be performed with Pandas (e.g. joining, grouping, aggregating, reshaping, pivoting). Pandas also offers statistical functions for simple analysis.
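
The operations described above can be sketched in a few lines. The example below is only an illustration with made-up data: it reads a small CSV from an in-memory string (standing in for a real file), backfills a missing value, and then groups, joins, and pivots the result. The column names, store labels, and the `regions` table are hypothetical.

```python
import io

import pandas as pd

# Made-up daily sales; the value for store A on day 2 is missing.
csv_text = """day,store,units
1,A,10
2,A,
3,A,14
1,B,4
2,B,6
3,B,8
"""

# Load the data into memory (a real project would pass a file path instead).
sales = pd.read_csv(io.StringIO(csv_text))

# Backfill: replace a missing value with the next valid value below it.
sales["units"] = sales["units"].bfill()

# Grouping and aggregating, similar to SQL's GROUP BY ... SUM(...).
totals = sales.groupby("store")["units"].sum()

# Joining with a second (hypothetical) table, similar to a SQL JOIN.
regions = pd.DataFrame({"store": ["A", "B"], "region": ["North", "South"]})
joined = sales.merge(regions, on="store", how="left")

# Pivoting: one row per day, one column per region.
pivot = joined.pivot_table(
    index="day", columns="region", values="units", aggfunc="sum"
)

print(totals)
print(pivot)
# Simple built-in statistics (count, mean, std, quartiles, ...).
print(sales["units"].describe())
```

Backfilling is only one way to handle gaps; whether it is appropriate depends on the data, and `fillna` or dropping the rows are common alternatives.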

Conclusion

Most industries that work with big data have recognized the value of machine learning and use it as a tool for growth. Machine learning is widespread in financial institutions, governments, health care, retail, oil and gas, and transportation, among others.